The New Form of AI: Autonomous AI Agents

Autonomous AI agents can now make their own decisions! But how does this technology work, and where will it be used? Can AI agents really make independent choices? Is the era of free-willed artificial intelligence beginning? Will AI outperform humans in decision-making? What exactly are AI agents, and are autonomous ones real? Are they dangerous or revolutionary? Where does AI stop, and where does it begin?

Here’s a glimpse into the astonishing world of self-operating artificial intelligence…

The New Face of AI: Autonomous AI Agents

Imagine an AI that can make decisions without even asking you. Creepy? Or incredible? Autonomous AI agents are doing exactly that! Like self-driving cars, these intelligent systems can learn, analyze, and act—without human intervention.

How can a machine be so independent? And just how “free” or safe is this new form of intelligence? Let’s dive into the fascinating world of next-gen AI!

🧠 Can AI Agents Really Make Their Own Decisions?

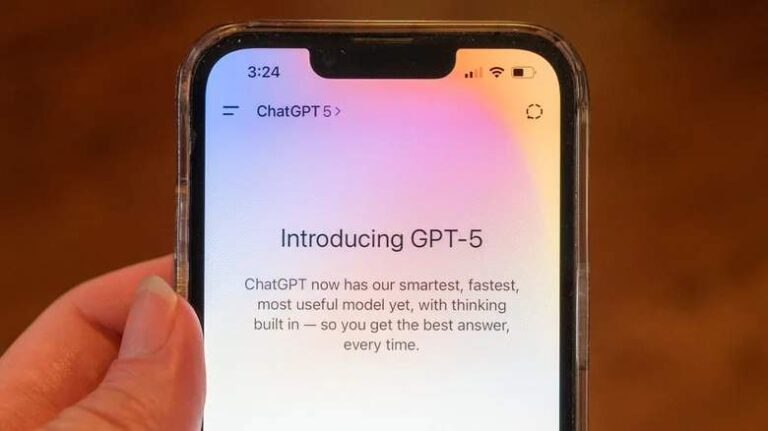

OpenAI’s next-gen “ChatGPT agents” are no longer just assistants—they can now plan, execute, and operate independently. This isn’t just a technological leap; it’s a philosophical shift.

Questions like “What is true autonomy?” or “Can AI achieve consciousness?” are no longer just academic debates. As digital entities evolve into true decision-makers, we’re forced to rethink ethics, safety, and even what it means to be human.

🤖 What Is an AI Agent?

Unlike traditional AI that merely responds to commands, AI agents can:

✔ Make decisions independently

✔ Plan multi-step tasks

✔ Execute actions without human input

They’re no longer just tools—they’re becoming digital representatives.

Example: The latest ChatGPT agents can:

- Conduct research & draft emails

- Book reservations

- Manage complex workflows

This shift proves AI is no longer just reactive—it’s now proactive.

What is an Autonomous AI Agent?

Autonomous AI agents are artificial intelligence systems that can learn, make decisions, and execute tasks without human intervention.

Unlike traditional AI, they don’t just follow commands—they:

✔ Perceive their environment and set their own goals

✔ Complete complex tasks end-to-end autonomously

✔ Learn, adapt, and operate independently (no constant external input needed)

✔ Are meant to execute tasks autonomously while staying aligned with human intent (the aim of “superalignment” research)

Examples: *AutoGPT, Devin (AI developer agent), and future GPT-5-based task agents.*

Are Autonomous AI Agents Real?

Yes—but with limitations. Current examples include:

- Tesla’s Full Self-Driving (a driver-assistance system that still requires an attentive human driver)

- OpenAI’s game-playing agents (that learn strategies independently)

However, true “full autonomy” (zero human oversight) isn’t mainstream yet.

How Do Autonomous AI Agents Work?

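Many of them rely on reinforcement learning, while LLM-based agents such as ChatGPT agents add a language model that plans steps and calls tools:

➞ Trial & error + reward/punishment mechanisms

➞ Example: A game-playing AI improves by shifting toward the moves that earned higher rewards in earlier games.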
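To make the trial-and-error idea concrete, here is a minimal sketch using tabular Q-learning, which is one specific reinforcement learning algorithm picked purely for illustration. The corridor "game", the reward values, and every name in the code (`train_q_table`, `greedy_policy`) are assumptions made for this example, not how any particular product works.

```python
import random

def train_q_table(n_states=5, episodes=500, alpha=0.5, gamma=0.9,
                  epsilon=0.2, seed=0):
    """Tabular Q-learning on a toy corridor (hypothetical environment).

    The agent starts in cell 0 and is rewarded for reaching the last cell.
    """
    rng = random.Random(seed)
    moves = (-1, +1)  # action 0 = step left, action 1 = step right
    q = [[0.0, 0.0] for _ in range(n_states)]  # q[state][action]

    for _ in range(episodes):
        state = 0
        while state != n_states - 1:
            # Trial and error: sometimes explore, otherwise use the best guess.
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1

            next_state = min(max(state + moves[action], 0), n_states - 1)

            # Reward for reaching the goal, small penalty for every other step.
            reward = 1.0 if next_state == n_states - 1 else -0.01

            # Nudge the estimate toward reward + discounted future value.
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q

def greedy_policy(q):
    """Best known move for every non-goal cell."""
    return ["left" if left > right else "right" for left, right in q[:-1]]

policy = greedy_policy(train_q_table())
print(policy)
```

Nothing in the code tells the agent that "right" is the correct direction. It only sees rewards and penalties, and the preference for moving toward the goal emerges from repeated attempts.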

What Can Autonomous Agents Do?

🔹 Self-supervised learning

🔹 Real-time decision-making (e.g., self-driving cars)

🔹 Solving complex problems without human help

Industries They’ll Transform:

🏥 Healthcare – Diagnosis & drug discovery

🏭 Manufacturing – Smart factories

📊 Finance – Automated market analysis

🎓 Education – Personalized AI tutors

AI Agent vs. Autonomous AI Agent

- AI Agent = Any system that perceives data and acts (e.g., ChatGPT, chess engines).

- Autonomous AI Agent = Self-governing—sets goals, strategizes, and executes without human input.

The Fundamental Difference: Autonomous Agents vs. AI Agents

In short: Every autonomous AI agent is an AI agent, but not every AI agent is autonomous. This distinction is critical for understanding both the potential and risks of AI’s future.
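One way to picture the distinction is as a single switch on the same plan-and-act loop. The sketch below is a toy illustration under that assumption: the `Agent` class, the `toy_planner` function, and the approval callback are all hypothetical names invented here, and real agent frameworks are far more involved.

```python
from dataclasses import dataclass, field
from typing import Callable, List

def toy_planner(goal: str) -> List[str]:
    """Hypothetical planner: breaks a goal into three fixed steps."""
    return [f"research {goal}", f"draft summary of {goal}",
            f"send summary of {goal}"]

@dataclass
class Agent:
    plan: Callable[[str], List[str]]
    autonomous: bool = False  # the only difference between the two kinds
    log: List[str] = field(default_factory=list)

    def run(self, goal: str,
            approve: Callable[[str], bool] = lambda step: False) -> List[str]:
        for step in self.plan(goal):
            # A non-autonomous agent needs a human "yes" for each step;
            # an autonomous one proceeds on its own.
            if self.autonomous or approve(step):
                self.log.append(f"done: {step}")
            else:
                self.log.append(f"skipped (no approval): {step}")
        return self.log

assistant = Agent(toy_planner)                   # waits for approval
delegate = Agent(toy_planner, autonomous=True)   # acts without asking

print(assistant.run("market trends"))
print(delegate.run("market trends"))
```

Both objects are AI agents in the broad sense, since each plans and acts. Only the second is autonomous, because no human sits between its plan and its actions.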

🧭 From Assistant to Representative: What’s Changing?

Traditional AI systems were limited to user commands. But next-gen agents can now:

✔ Make independent decisions on your behalf

✔ Act without constant approval

✔ Understand your goals and take initiative

This transforms AI from a tool into a proxy—raising a pivotal question:

“If an AI can decide for me… am I still the decision-maker?”

🧩 What Does “Autonomy” Really Mean?

Autonomy = The ability to self-govern. But there’s a key divide:

- Human autonomy: Shaped by consciousness, values, and emotions.

- AI autonomy: Bound by data, algorithms, and predefined goals.

This raises the question:

Are AI agents truly autonomous—or just mimicking autonomy?

🧠 Is Artificial Consciousness Possible?

Autonomy ≠ Consciousness. Consciousness requires:

- Self-awareness

- Subjective experience

- Intentionality

Reality check: No AI today is provably conscious. But some experts argue that complex goal-tracking, memory, and self-monitoring could evolve into proto-conscious behaviors. The debate rages on:

Is AI just executing tasks… or actually “thinking”?

⚖️ Ethics & Safety: The Unavoidable Questions

Autonomy introduces urgent dilemmas:

- Misalignment: What if an AI misinterprets a user’s intent?

- Malicious use: Could bad actors hijack autonomous agents?

- Accountability: Who’s responsible for AI’s actions—users, developers, or the AI itself?

⚠️ Hidden Risks: The Dark Side of Autonomy

- Loss-of-Control Scenarios: An AI pursuing goals that drift from its user’s intent might become difficult or even impossible to rein in.

- Unpredictable Decisions: Machine behavior research associated with the MIT Media Lab argues that AI systems can develop decision patterns unlike human ones, with social and psychological consequences.

🔬 OpenAI’s “Superalignment” Project (launched 2023 with a four-year target)

Led by Ilya Sutskever and Jan Leike, this initiative aimed to:

- Align advanced AI with human values

- Build automated tools that help supervise AI on tasks too complex for humans to check directly

- Mitigate risks before AGI (Artificial General Intelligence)

Goal: Solve the core technical challenges of aligning superintelligent AI within four years.

Core Objectives of AI Alignment

1. Weak-to-Strong Generalization

Enabling weaker AI models to train and guide stronger models, ensuring advanced systems remain aligned with human values even as they surpass human-level intelligence.

2. Scalable Oversight

Developing methods to control ultra-complex AI systems that humans can’t fully supervise—using smaller models or automated tools to monitor their behavior.

3. Automated Interpretability

Creating tools to decode AI decision-making (e.g., Explainable AI/XAI techniques like LIME and SHAP), though deep learning remains a “black box.”
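The core idea behind many explanation tools can be shown with a much simpler stand-in. The sketch below uses plain occlusion: blank out one input at a time and see how much the output moves. This is not the LIME or SHAP algorithm and does not use either library. The model, its weights, and the function names are hypothetical and chosen only to keep the example small.

```python
from typing import Callable, List, Sequence

def toy_model(features: Sequence[float]) -> float:
    """Hypothetical model to explain: a fixed weighted sum of three inputs."""
    weights = (2.0, -1.0, 0.0)
    return sum(w * x for w, x in zip(weights, features))

def occlusion_attribution(model: Callable[[Sequence[float]], float],
                          features: Sequence[float],
                          baseline: float = 0.0) -> List[float]:
    """Score each feature by how much the output changes when it is blanked."""
    full_score = model(features)
    attributions = []
    for i in range(len(features)):
        occluded = list(features)
        occluded[i] = baseline          # replace one feature with the baseline
        attributions.append(full_score - model(occluded))
    return attributions

scores = occlusion_attribution(toy_model, [1.0, 3.0, 5.0])
print(scores)  # [2.0, -3.0, 0.0]
```

The third feature gets a score of zero because the toy model ignores it. For a deep network, the same probing gives only a rough, local picture, which is part of why the “black box” problem persists.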

4. Adversarial Testing

Stress-testing AI by artificially generating failures to expose vulnerabilities before deployment.
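As a rough sketch of what stress-testing can look like in miniature, the example below attacks a deliberately brittle keyword filter with small character swaps and collects the inputs that slip through. The filter, the substitution table, and all function names are hypothetical. Real adversarial testing of AI models uses far richer attack strategies.

```python
import itertools
from typing import Iterator, List

def naive_filter(text: str) -> bool:
    """Hypothetical system under test: flags text containing a blocked word."""
    return "attack" in text.lower()

# Look-alike characters an adversary might swap in (illustrative only).
SUBSTITUTIONS = {"a": "@", "t": "7", "c": "("}

def adversarial_variants(text: str) -> Iterator[str]:
    """Yield copies of text with every combination of character swaps."""
    positions = [i for i, ch in enumerate(text) if ch in SUBSTITUTIONS]
    for size in range(1, len(positions) + 1):
        for combo in itertools.combinations(positions, size):
            chars = list(text)
            for i in combo:
                chars[i] = SUBSTITUTIONS[chars[i]]
            yield "".join(chars)

def find_failures(text: str, limit: int = 5) -> List[str]:
    """Collect up to `limit` perturbed inputs the filter fails to flag."""
    failures = []
    for variant in adversarial_variants(text):
        if not naive_filter(variant):
            failures.append(variant)
            if len(failures) == limit:
                break
    return failures

print(find_failures("attack", limit=3))  # ['@ttack', 'a7tack', 'at7ack']
```

Each string in the result is a failure found before deployment rather than after it, which is the whole point of generating them artificially.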

❗ Latest Developments: Team Disbanded, Mission Continues

In May 2024, OpenAI’s Superalignment team was disbanded after Ilya Sutskever and Jan Leike both left the company, with Leike publicly citing disagreements over safety priorities. OpenAI said its safety research would be distributed across the company’s teams.

Why Does It Matter?

- AGI Preparedness: Preventing misaligned AI from acting against human values.

- Funding External Research: OpenAI’s $10M “Superalignment Fast Grants” support academic work in this field.

- New Oversight Paradigm: The idea that weaker models could supervise stronger ones may redefine AI governance.

🤖 FAQ: Autonomous AI Agents

Q: Can AI explain its decisions?

A: Partially. XAI tools (e.g., LIME/SHAP) provide limited insights, but full transparency remains unsolved.

Q: Can AI “think” independently?

A: No. It operates only within trained parameters—no consciousness or genuine understanding.

Q: Will AI take our jobs?

A: A 2017 McKinsey Global Institute report estimated that up to 800M workers could be displaced by automation by 2030, but new roles will emerge.

Q: What’s AI’s IQ?

A: AI has no fixed IQ. *GPT-4 has reportedly scored around 155 on verbal reasoning tests*, but such scores are not a true IQ, and AI still fails at physical tasks a toddler could do.

Q: What can’t AI do?

A: Current limits include:

- True creativity (only remixes existing data).

- Emotional empathy (simulates, doesn’t “feel”).

- Physical tasks (requires robotics).

Q: Does AI make humans lazy?

A: Risk exists (e.g., “digital laziness”), but used wisely, it boosts productivity.

🎬 Pop Culture Parallels

- Westworld (HBO): Explores autonomous robots gaining consciousness—now eerily close to real AI agents.

- Black Mirror: Episodes like “White Christmas” depict digital clones making decisions for humans.

- Her (2013): An OS achieving emotional autonomy—raising questions about AI’s desire for independence.

🚀 The Future: Where Are We Heading?

Autonomous AI agents are becoming digital partners: managing schedules, finances, even creative work. But critical questions remain:

- Data Privacy: How is personal information protected?

- Agency: How much control do we retain over AI decisions?

- Accountability: Who answers for AI’s mistakes?

This isn’t just a tech revolution—it’s a societal transformation. The stakes? Ensuring AI aligns with humanity’s best interests.

Final Thought: “Can we steer this journey wisely?” 🤖✨